Reverse Proxying and Load Balancing with Nginx

4 Oct '24

Nginx ("engine x") is free and open-source software first released in 2004. It is commonly used as an HTTP web server, reverse proxy and load balancer. It has other use cases too, which are described on its official site at https://nginx.org/en/. Let's take a closer look at what an HTTP web server, a reverse proxy and a load balancer are.

1) Web Server

At a minimum, this is an HTTP server. An HTTP server is software that understands URLs (web addresses) and HTTP (the protocol your browser uses to view webpages). An HTTP server can be accessed through the domain names of the websites it stores, and it delivers the content of these hosted websites to the end user's device. (Mozilla MDN)

At the most basic level, whenever a browser needs a file that is hosted on a web server, the browser requests the file via HTTP. When the request reaches the correct (hardware) web server, the (software) HTTP server accepts the request, finds the requested document, and sends it back to the browser, also through HTTP. (If the server doesn't find the requested document, it returns a 404 response instead.)
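The lookup step described above can be sketched in a few lines of Java. This is only an illustration of the idea, not how Nginx is implemented; the class name StaticFileLookup, the Response type and the handle method are hypothetical names chosen for this example.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper: maps a request path to a file under a document root.
final class StaticFileLookup {

    // Minimal response holder: an HTTP status code and the bytes to send back.
    static final class Response {
        final int status;
        final byte[] body;

        Response(int status, byte[] body) {
            this.status = status;
            this.body = body;
        }
    }

    static Response handle(Path docRoot, String requestPath) throws IOException {
        Path root = docRoot.toAbsolutePath().normalize();
        // Drop the leading slash so the path resolves relative to the root.
        String relative = requestPath.startsWith("/") ? requestPath.substring(1) : requestPath;
        Path target = root.resolve(relative).normalize();

        // Anything outside the root, or not a regular file, is reported as missing.
        if (!target.startsWith(root) || !Files.isRegularFile(target)) {
            return new Response(404, "Not Found".getBytes(StandardCharsets.UTF_8));
        }
        return new Response(200, Files.readAllBytes(target));
    }
}
```

A real web server also handles headers, content types, caching and concurrency; the sketch only covers the "find the document or return 404" decision.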

2) Reverse Proxy

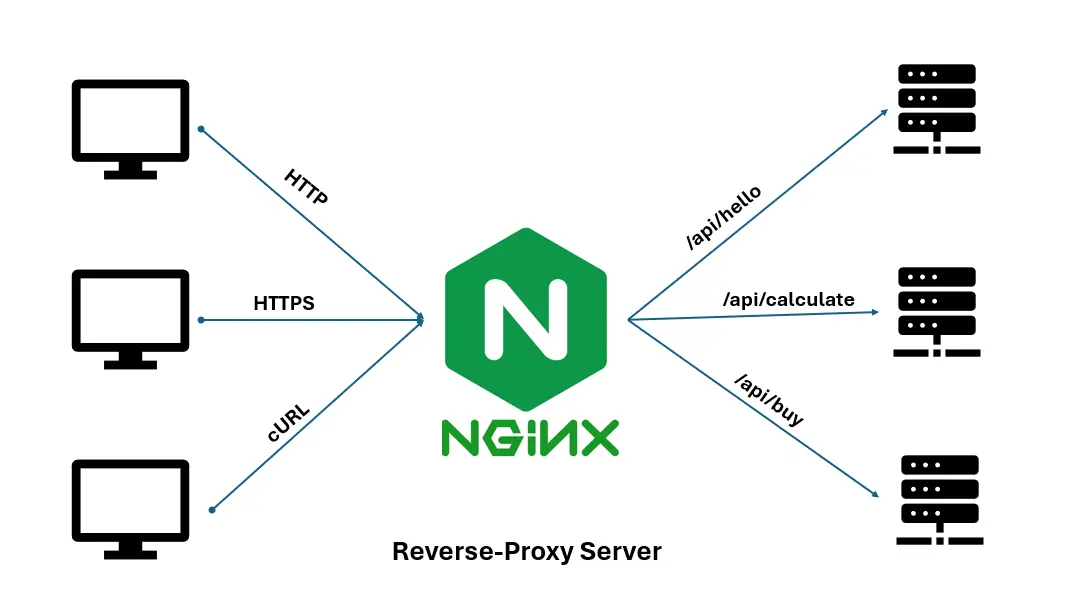

A reverse proxy is a server that sits in front of web servers. It forwards client requests to those web servers and then returns the servers' responses to the client. While a forward proxy serves clients by routing their requests to external resources, a reverse proxy serves web servers by managing client connections on their behalf. Reverse proxies increase security, performance and reliability (Cloudflare).

3) Load Balancer

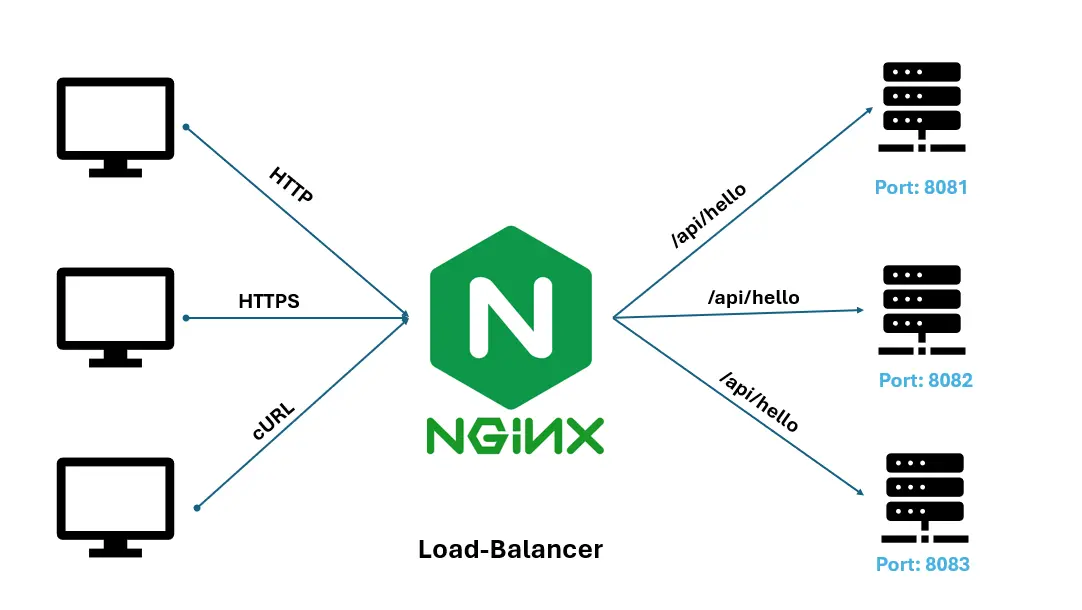

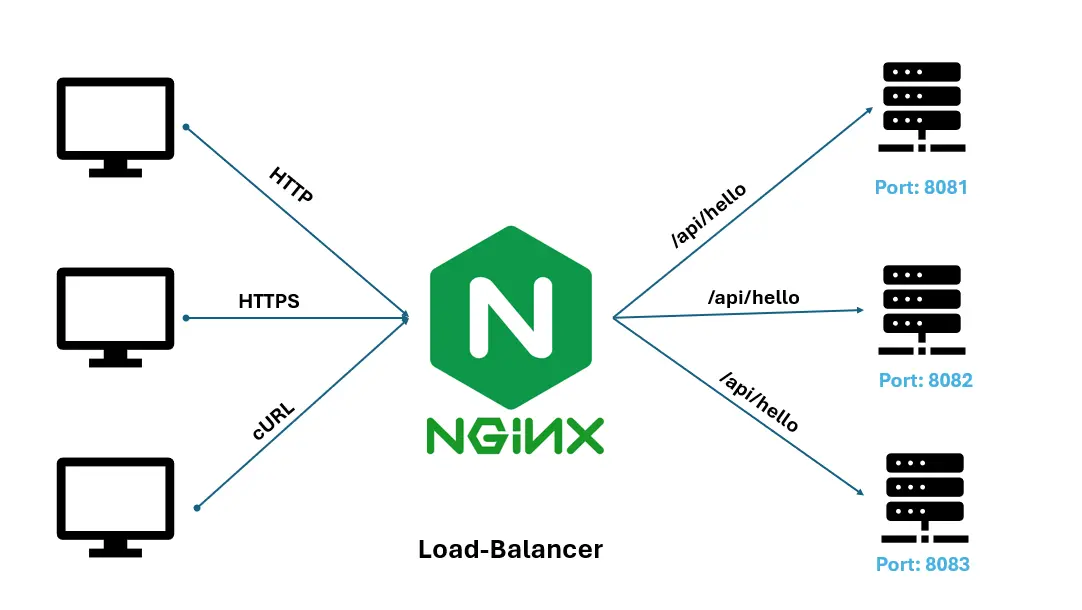

A load balancer is a device that sits between the user and the server group and acts as an invisible facilitator, ensuring that all resource servers are used equally (AWS). Load balancing can be considered a feature of a reverse proxy. The images above show how a load balancer works: multiple servers exposing the same API endpoints are set up, and the load balancer distributes incoming client requests across them as evenly as possible. There are various distribution algorithms; two of the most commonly used are round-robin and least connections. In round-robin, the servers take turns sequentially to serve requests. In the least connections method, the load balancer checks which server has the fewest active connections at that moment and sends the request to that server. Using a load balancer improves application availability, scalability, security and performance.
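To make the two algorithms concrete, here is a minimal Java sketch of each selection strategy. It illustrates the ideas only and is not Nginx's implementation; the class names Balancers, RoundRobin and LeastConnections, and the server labels used with them, are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;

final class Balancers {

    // Round-robin: hand out servers in order, wrapping around at the end.
    static final class RoundRobin {
        private final List<String> servers;
        private final AtomicInteger next = new AtomicInteger();

        RoundRobin(List<String> servers) {
            if (servers.isEmpty()) throw new IllegalArgumentException("no servers");
            this.servers = List.copyOf(servers);
        }

        String pick() {
            // floorMod keeps the index valid even if the counter overflows.
            return servers.get(Math.floorMod(next.getAndIncrement(), servers.size()));
        }
    }

    // Least connections: pick the server with the fewest active connections.
    static final class LeastConnections {
        private final Map<String, Integer> active = new LinkedHashMap<>();

        LeastConnections(List<String> servers) {
            if (servers.isEmpty()) throw new IllegalArgumentException("no servers");
            for (String s : servers) active.put(s, 0);
        }

        // Choose a server and count the new connection against it.
        // Ties go to the server listed first.
        synchronized String acquire() {
            String best = null;
            for (Map.Entry<String, Integer> e : active.entrySet()) {
                if (best == null || e.getValue() < active.get(best)) best = e.getKey();
            }
            active.merge(best, 1, Integer::sum);
            return best;
        }

        // Call when a connection finishes so the server's count drops again.
        synchronized void release(String server) {
            active.computeIfPresent(server, (k, v) -> Math.max(0, v - 1));
        }
    }
}
```

With three servers, RoundRobin.pick() cycles through them in order, while LeastConnections.acquire() returns to a server as soon as release() has lowered its count below the others.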

Setting up the reverse proxy and load balancer

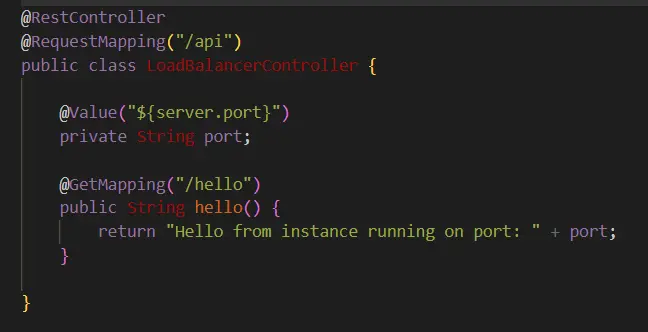

In this tutorial, we will configure a Spring Boot application to work behind Nginx acting as a reverse proxy and load balancer. The application has a simple REST API endpoint that returns the port number of the application instance that served the request.
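For readers who want to experiment without a Spring Boot project, the same idea (an endpoint that reports the port of the instance answering) can be approximated with the JDK's built-in com.sun.net.httpserver package. This is a stand-in, not the tutorial's Spring Boot code; the class name PortEchoServer, the /port path and the default port 8081 are assumptions made for this sketch.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

// JDK-only stand-in for the Spring Boot application (hypothetical class name).
final class PortEchoServer {

    // Starts an HTTP server on the given port (0 lets the OS pick a free one)
    // and registers /port, which replies with the port actually bound.
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/port", exchange -> {
            byte[] body = String.valueOf(server.getAddress().getPort())
                    .getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        // Assumed default port; pass a different one as the first argument.
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 8081;
        HttpServer server = start(port);
        System.out.println("Listening on port " + server.getAddress().getPort());
    }
}
```

Starting this class several times with different port arguments gives a set of backends that behave like the instances described in this tutorial, each answering with its own port.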

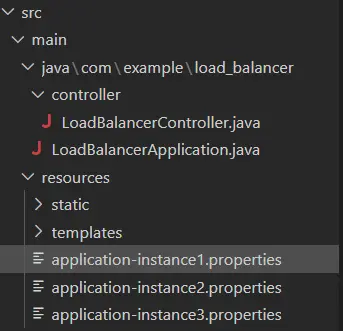

First, create several profile-specific copies of the application.properties file. These files are used to launch multiple instances of the application (three in the example below). In each file, set the port on which that instance should listen (the server.port property).

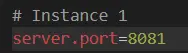

Run each instance with Maven, selecting its profile with -Dspring-boot.run.profiles=instance[number] (for example, mvn spring-boot:run -Dspring-boot.run.profiles=instance1). Every profile name passed this way must have a corresponding application-instance[number].properties file.

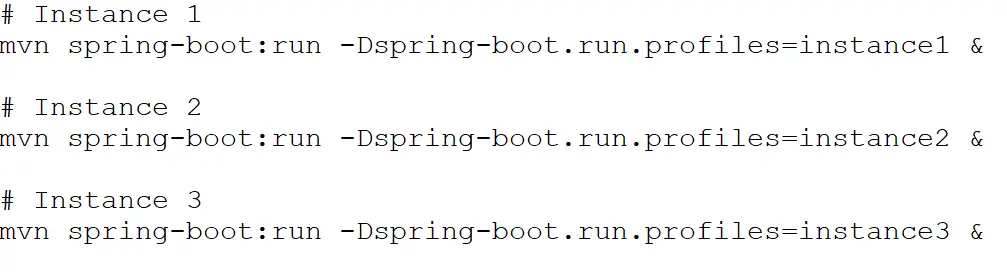

Next, we edit Nginx's configuration file. The server block configures the reverse proxy. In this example, it listens for requests on the default HTTP port, 80. The server_name directive gives the name of the server; on a local machine, this can be set to any arbitrary name. The location directive specifies how Nginx should respond to specific request URIs (URLs): it matches parts of the URL and defines what Nginx should do with requests that match. The proxy_pass directive defines the backend server (upstream service) to which Nginx forwards the request.

The load balancer is configured in the upstream block, which lists the servers that take part in load balancing. By default, Nginx uses the round-robin algorithm to distribute traffic evenly among those servers.

Save the configuration file and launch Nginx from the terminal with the command 'start nginx' (the Windows form; on Linux or macOS the command is usually just nginx). And that is all there is to it. Test the load balancer by making multiple calls to the endpoint: each response contains the port number of the application instance that served it.
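As a rough sketch, a configuration matching the description above could look like the following. The upstream name backend, the ports 8081 to 8083 and the server_name value are assumptions for illustration; use the ports from your own properties files.

```nginx
# Minimal sketch; names and ports below are assumed, not taken from the tutorial.
events {}

http {
    # Load balancer: the pool of application instances.
    upstream backend {
        # least_conn;            # uncomment to use least connections instead of round-robin
        server localhost:8081;
        server localhost:8082;
        server localhost:8083;
    }

    # Reverse proxy: accepts client requests and forwards them to the pool.
    server {
        listen 80;
        server_name localhost;

        location / {
            proxy_pass http://backend;
        }
    }
}
```

With this in place, repeated requests to the endpoint through port 80 should cycle through the three ports under the default round-robin behaviour.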

The video below describes the steps implemented above.

Modern applications often use Nginx in cloud environments as part of their architecture. For example, Nginx is often paired with services like AWS EC2 (Elastic Compute Cloud), Google Cloud Platform's Compute Engine, or Kubernetes to route traffic to the instances or pods running the application. In AWS, Nginx is often deployed on EC2 instances or in containerised applications using Elastic Container Service (ECS) or Elastic Kubernetes Service (EKS). It can also be combined with AWS Elastic Load Balancers for a hybrid approach.

Hope you have enjoyed reading this article and found it useful. Till next time...